We've been working on our customizable portal software discoverize for about two years now using Orchard CMS. From the beginning we were committed to using Specification by Example to build up living documentation of the functionality of our software. This has been very important to us since we plan to drive tens if not hundreds of portals using the same code base.

Last year, I wrote about how we do our integration testing. I also wrote about why we do browser-based testing as opposed to lower-level testing such as that in the Orchard.Specs project inside the Orchard source solution. But here we are, a year has passed, and we're still not happy with our approach.

Problems we're facing

The biggest problem is that writing an acceptance test for a new feature takes nearly as long as implementing the feature itself. This might be tolerable for mission-critical software used in banks or space shuttles, but it's over the top for a consumer website. On the other hand, we want assurance that the software we ship contains as few bugs as possible.

Development speed down by 50%

The websites we generate using our software are quite complex and interactive. This has repeatedly made it hard to write robust browser-based tests. We chose Coypu over plain Selenium because it has a cleaner API and handles asynchronous postbacks really well, but its API has still been limiting, so we regularly find ourselves hacking around those limitations. Those workarounds have to be tested too, of course, which takes a noticeable amount of time.

Another problem we face is that we need to change our HTML to accommodate testing. For example, we keep adding id attributes to elements just so we have an easy and reliable way of accessing those elements in our specification tests. This doesn't seem right, but that's how we get stuff to work.
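To illustrate why we end up reaching for ids, here is a minimal sketch in Python (not our actual Coypu-based test code). The markup, the `search-box` id and both helper functions are made up for the example. It looks up the same input once by its position in the tree and once by its id, before and after a purely cosmetic wrapper element is added.

```python
import xml.etree.ElementTree as ET

# Hypothetical markup before and after a layout change (a wrapper div is added).
BEFORE = '<body><form><input id="search-box" name="q" /></form></body>'
AFTER = ('<body><div class="sidebar"><form>'
         '<input id="search-box" name="q" /></form></div></body>')


def find_by_structure(markup):
    """Locate the input via its fixed position below the root element."""
    return ET.fromstring(markup).find("./form/input")


def find_by_id(markup):
    """Locate the input via its id, wherever it sits in the tree."""
    return ET.fromstring(markup).find(".//*[@id='search-box']")


for label, markup in (("before", BEFORE), ("after", AFTER)):
    print(label,
          "structure:", find_by_structure(markup) is not None,
          "id:", find_by_id(markup) is not None)
```

The structural lookup stops matching as soon as the wrapper appears, while the id lookup keeps working. That is the reliability we buy by adding ids, at the price of markup that exists only for the tests.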

Test execution too slow for continuous feedback

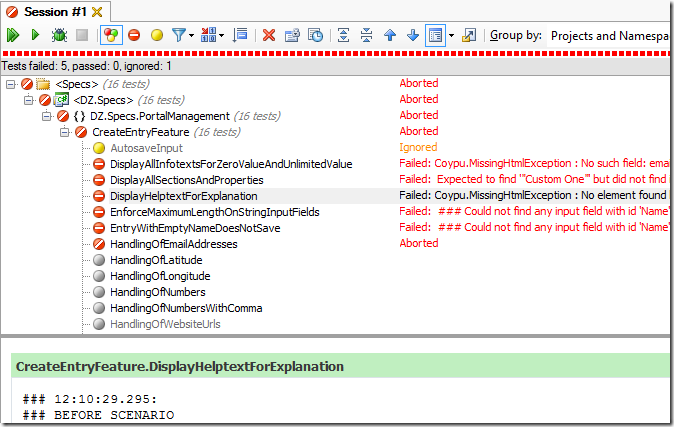

The spec test execution time is too high. We're talking about 50-90 seconds per test case if it passes, plus another 20-30 seconds if it fails (because of the browser automation timeouts). That's a real bummer because it's so easy to lose focus during that time. Additionally, before executing a test suite (or a single test, if that's what you want) we compile and publish our code to a separate destination which the specs run on. This process takes another 45-50 seconds, which is OK if you run all specs at once but adds significant overhead when working on a single acceptance test.
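To put those numbers together, here is a small back-of-the-envelope sketch in Python. The timing ranges are the ones quoted above; the `feedback_time` helper, the assumption that tests run one after another, and the suite size of 20 are made up for illustration.

```python
# Figures quoted in the text, as (low, high) ranges in seconds.
PUBLISH = (45, 50)        # compile and publish before any run
PASSING_TEST = (50, 90)   # one passing test case
FAILURE_EXTRA = (20, 30)  # extra time per failing test (browser timeouts)


def feedback_time(tests, failing=0):
    """Return the (low, high) seconds for one run, assuming sequential tests."""
    low = PUBLISH[0] + tests * PASSING_TEST[0] + failing * FAILURE_EXTRA[0]
    high = PUBLISH[1] + tests * PASSING_TEST[1] + failing * FAILURE_EXTRA[1]
    return low, high


print(feedback_time(1))             # single passing test: (95, 140)
print(feedback_time(1, failing=1))  # single failing test: (115, 170)
print(feedback_time(20))            # hypothetical suite of 20: (1045, 1850)
```

For a single test, the publish step accounts for roughly a third to a half of the wait, while for the hypothetical 20-test suite it is under 5%.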

Related to this, we keep having trouble quickly finding the cause of a broken test. Not all of our commits are pushed through the acceptance test pipeline, since a single run sometimes takes longer than the time between commits, so a failing run can cover several commits at once.

Test fragility keeps us busy

Another recurring problem is tests breaking due to UI changes. This might be a simple change to CSS, HTML or a JavaScript snippet, but it happens all the time. Also, there are usually at least a couple of tests that break simultaneously because they reuse certain steps, which is not only annoying but often misleading as to where the error really comes from.

Demoralization

All of the above leads to decreased morale, both in writing new tests and in fixing broken ones, which in turn adds even more overhead to the development process.

Looking for success stories

This post came into existence because we believe in Specification by Example and we also believe that other teams are successfully running integration tests, even with an automated browser. If you're part of such a team, or have any other valuable feedback to share, please do so in the comments.

Happy testing!